1. CREATE cluster

Jupyter: connecting to Jupyter Lab through an SSH tunnel

- Start an interactive session

ssh -m hmac-sha2-512 k21116947@hpc.create.kcl.ac.uk

Make a note of the node you’re connected to, e.g. erc-hpc-comp001

- Within this session, start Jupyter Lab without opening a browser, on a specific port (here, port 9998)

conda activate PhD

- Open a separate connection to CREATE that forwards the port you specified earlier to the node where Jupyter Lab is running. Replace erc-hpc-comp037 in the command with the node you noted. (The VS Code terminal is known to cause problems.)

ssh -m hmac-sha2-512 -o ProxyCommand="ssh -m hmac-sha2-512 -W %h:%p k21116947@bastion.er.kcl.ac.uk" -L 9998:erc-hpc-comp037:9998 k21116947@hpc.create.kcl.ac.uk
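The steps above can be sketched as follows. This is a minimal sketch, not the exact commands from these notes: the username k1234567 and the helper tunnel_cmd are placeholders, and the jupyter lab line assumes the standard --no-browser and --port options. The helper only prints the tunnel command so the node and port can be checked before running it.

```shell
# Hypothetical values: replace with your own username, node, and port.
KCL_USER="k1234567"
NODE="erc-hpc-comp001"   # the node noted in the interactive session
PORT=9998

# On the compute node (inside the interactive session), something like:
#   conda activate PhD
#   jupyter lab --no-browser --port=9998

# On the local machine: print the tunnel command for a given user, node, and port.
tunnel_cmd() {
    printf 'ssh -m hmac-sha2-512 -o ProxyCommand="ssh -m hmac-sha2-512 -W %%h:%%p %s@bastion.er.kcl.ac.uk" -L %s:%s:%s %s@hpc.create.kcl.ac.uk\n' \
        "$1" "$3" "$2" "$3" "$1"
}

tunnel_cmd "$KCL_USER" "$NODE" "$PORT"
```

Once the tunnel is open, Jupyter Lab should be reachable in a local browser at http://localhost:9998, using the token printed by Jupyter in the interactive session.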

SCP: transferring files

Download: in Windows File Explorer, Shift + right-click inside the target folder to open PowerShell there, then use scp to copy files from the server to your local machine. For example:

scp -o MACs=hmac-sha2-512 create:/users/k21116947/1.py /1/loc.py

Upload:

scp -o MACs=hmac-sha2-512 1.py create:/users/k21116947/1.py

To copy a folder, add -r:

scp -o MACs=hmac-sha2-512 -r create:/users/k21116947/ABCD/trail4 /trail
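The scp commands above refer to the server as create, which presumably is a host alias defined in ~/.ssh/config. A minimal sketch of such an entry is below; the helper name print_create_host and the username k1234567 are placeholders, and the real entry on your machine may differ (for instance, it may need a ProxyJump line if connections have to go through the bastion host used in the tunnel command).

```shell
# Print an ssh_config entry for a "create" alias.
# $1 is the username (k1234567 below is a placeholder).
print_create_host() {
    cat <<EOF
Host create
    HostName hpc.create.kcl.ac.uk
    User $1
    MACs hmac-sha2-512
EOF
}

print_create_host k1234567
```

To use it, the output could be appended to ~/.ssh/config, for example with print_create_host k1234567 >> ~/.ssh/config. With MACs set in the config, the -o MACs=hmac-sha2-512 option on the command line becomes redundant.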

In addition, rm deletes files (use rm -r to delete a folder).

Submitting a job via sbatch

cd to the directory that contains the script, then run:

sbatch -p cpu helloworld.sh

or, to use the default partition:

sbatch helloworld.sh

Issue: SLURM Job Exits After a Few Seconds

Symptom

When run.sh is submitted with sbatch run.sh, the job runs for only a few seconds before exiting. However, running bash run.sh directly works fine.

Cause Analysis

- Working directory issue:
  - The default working directory for sbatch might differ from the expected one, making files such as config.yml and search_space.json inaccessible.

- Conda environment not activated properly:
  - SLURM jobs run in a non-interactive shell and may not pick up the Conda environment activated in the interactive shell, so nnictl may be unable to find Python and its dependencies.

- NNI task runs in the background without blocking the process:
  - nnictl create --config config.yml starts the NNI task, but because run.sh finishes immediately afterwards, the SLURM job exits prematurely.

Solution

Modify run.sh as follows:

#!/bin/bash

This ensures:

- The correct working directory is used.

- The Conda environment is properly activated.

- The script does not exit prematurely, allowing NNI to run until the SLURM job times out or is manually stopped.
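A minimal sketch of a run.sh that addresses the three causes is below. It is an illustration under stated assumptions, not the original script: the environment name my_env is a placeholder, conda is assumed to be on the PATH of the batch shell, and sleep infinity is just one way to keep the job alive. The snippet writes the script to run.sh so that it can be syntax-checked before submitting.

```shell
# Write a sketch of run.sh to the current directory.
cat > run.sh <<'EOF'
#!/bin/bash

# 1. Run from the directory the job was submitted from,
#    so that config.yml and search_space.json can be found.
cd "${SLURM_SUBMIT_DIR:-$PWD}" || exit 1

# 2. Make "conda activate" available in a non-interactive shell
#    (assumes the conda command itself is on PATH).
source "$(conda info --base)/etc/profile.d/conda.sh"
# my_env is a hypothetical environment name.
conda activate my_env

# 3. Start NNI, then block so the job stays alive until it
#    times out or is cancelled.
nnictl create --config config.yml
sleep infinity
EOF

# Check the syntax without running anything.
bash -n run.sh && echo "run.sh: syntax OK"
```

The job can then be submitted with sbatch run.sh as before.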

Issue: shell script contains DOS line breaks (\r\n)

sbatch: error: Batch script contains DOS line breaks (\r\n)

Solution: convert the script to Unix line endings with dos2unix job.sh
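If dos2unix is not installed, the same fix can be sketched with standard tools. The helper names strip_cr and has_cr and the file job_demo.sh are hypothetical. Note that tr removes every carriage return in the file, which matches dos2unix for an ordinary CRLF script.

```shell
# Strip carriage returns from a file in place.
strip_cr() {
    tmp=$(mktemp) || return 1
    # Write the cleaned text back into the original file so its permissions are kept.
    tr -d '\r' < "$1" > "$tmp" && cat "$tmp" > "$1"
    rm -f "$tmp"
}

# Succeed if the file still contains a carriage return.
has_cr() {
    grep -q "$(printf '\r')" "$1"
}

# Demo on a hypothetical script saved with Windows line endings.
printf '#!/bin/bash\r\necho hello\r\n' > job_demo.sh
has_cr job_demo.sh && strip_cr job_demo.sh
```

After this, sbatch should no longer report DOS line breaks for the converted file.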

Submit with nohup

Running the submission loop under nohup keeps it alive after you log out, so jobs can be submitted automatically as slots free up instead of failing when the job-number limit is reached.

nohup bash -c '
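One way such a submission loop could look is sketched below. The function names, the POLL_SECONDS variable, and the idea of counting jobs with squeue are assumptions made for illustration, not the original command.

```shell
# Count the current user's jobs in the queue (-h suppresses the header line).
count_jobs() {
    squeue -u "$(id -un)" -h | wc -l
}

# Submit each script in turn, polling while the queue holds max_jobs or more.
# POLL_SECONDS is a hypothetical knob; it defaults to 60.
submit_when_free() {
    max_jobs=$1
    shift
    for script in "$@"; do
        while [ "$(count_jobs)" -ge "$max_jobs" ]; do
            sleep "${POLL_SECONDS:-60}"
        done
        sbatch "$script"
    done
}
```

Saved in a hypothetical file submit_lib.sh, this could be started in the background with nohup bash -c '. ./submit_lib.sh; submit_when_free 50 job_*.sh' > submit.log 2>&1 &, where 50 stands in for the actual job limit.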

Monitoring jobs

squeue -u k1234567

Setting working directory for the job

Add the commands that set the working directory to the job's .sh file.
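A minimal sketch of two common ways to set the working directory, assuming a bash job script. The path in the directive is a placeholder, and enter_submit_dir is a hypothetical helper name. SLURM sets SLURM_SUBMIT_DIR to the directory sbatch was called from; the fallback to $PWD only keeps the line harmless when the script is run outside SLURM.

```shell
#!/bin/bash
# Option 1 (directive, hypothetical path): remove one leading "#" to enable it,
# and SLURM starts the job in that directory.
##SBATCH --chdir=/scratch/users/k1234567/my_project

# Option 2: change to the directory the job was submitted from.
enter_submit_dir() {
    cd "${SLURM_SUBMIT_DIR:-$PWD}" || return 1
}

enter_submit_dir || exit 1
echo "Running in: $(pwd)"
```

Option 2 is convenient when the job is always submitted from the project folder; Option 1 fixes the location regardless of where sbatch is called.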

Loading modules

For example, to use TensorFlow:

module load python/3.11.6-gcc-13.2.0

Singularity

Check a BIDS dataset with the BIDS validator:

singularity run -B /scratch/users/k21116947:/mnt docker://bids/validator /mnt/abcd-mproc-release5

ignore the motion.ts

Pipeline for running fMRIPrep on the cluster

cd /scratch/users/k21116947

2. Jupyter

Install a kernel for .ipynb notebooks:

conda activate NN
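After activating the environment, the kernel is typically registered with ipykernel. The sketch below assumes ipykernel is already installed in the environment (for example via conda install ipykernel); install_kernel is a hypothetical helper name, and the display name is only a suggestion.

```shell
# Register the active environment as a Jupyter kernel.
# $1 is the kernel name, for example the conda environment name.
install_kernel() {
    env_name=$1
    python -m ipykernel install --user --name "$env_name" --display-name "Python ($env_name)"
}

# Example call (run after "conda activate NN"):
# install_kernel NN
```

The new kernel should then appear in the kernel list of Jupyter Lab under the chosen display name.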

3. Quick commands

nano can be used to edit files from the command line.

4. Python

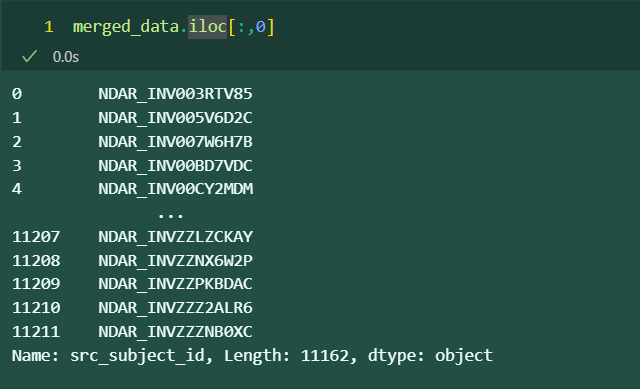

.iloc[] selects rows and columns of a DataFrame by integer position. For a DataFrame named df, for example, df.iloc[:, 0] returns the first column,

where : selects all rows and 0 selects the first column.

~ is the negation (NOT) operator, applied element-wise to a boolean Series or array. Example: applying ~ to [True, False, False, True] gives [False, True, True, False].

5. GitHub

Ignoring files when uploading:

Create a .gitignore file in the repository root and list the files to ignore in it, for example:

# Ignore a single file
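A short sketch of what such a .gitignore could contain, written from the command line. The three patterns are hypothetical placeholders; they illustrate ignoring one file, one folder, and one file type.

```shell
# Append example patterns to .gitignore in the current directory.
# All three names are hypothetical placeholders.
cat >> .gitignore <<'EOF'
# Ignore a single file
secret_config.yml
# Ignore a whole folder
data/
# Ignore every file with a given extension
*.log
EOF
```

Files that are already tracked are not affected by .gitignore; they would first have to be removed from the index with git rm --cached.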

6. Graph

The Python Graph Gallery contains example plots.

If Python is not sufficient, consider D3.js.

7. Tips for ChatGPT

I’m writing a paper on 【topic】 for a leading 【discipline】 academic journal. What I tried to say in the following section is 【specific point】. Please rephrase it for clarity, coherence and conciseness, ensuring each paragraph flows into the next. Remove jargon. Use a professional tone.